About Me

Welcome to my website! :) I am a postdoc research fellow at the Kempner Institute at Harvard University. My research aims to make machine learning methods more efficient and accessible. Most recently, I'm particularly interested in understanding how various inductive biases (from data usage, training algorithms, and architecture) affect training efficiency and inference cost. I'm broadly interested in bridging theoretical and empirical/scientific understanding of machine learning, often drawing insights from synthetic "sandbox" settings (write-up).

Before Kempner, I was a postdoc fellow at the Simons Institute, participating in the Special Year of Language Models and Modern Paradigm of Generalization programs. I got my PhD from the Machine Learning Department of Carnegie Mellon University, where I was fortunate to be co-advised by Prof. Andrej Risteski and Prof. Pradeep Ravikumar. Previously, I was a master's student in the Stanford Vision and Learning Lab, where I worked on video understanding and its applications to healthcare under the supervision of Prof. Fei-Fei Li, Prof. Juan Carlos Niebles, and Prof. Serena Yeung.

I will be on the academic job market in Spring 2027 for positions starting in Fall 2027 (together with Tim Hsieh). Please keep us in mind! :)

Research

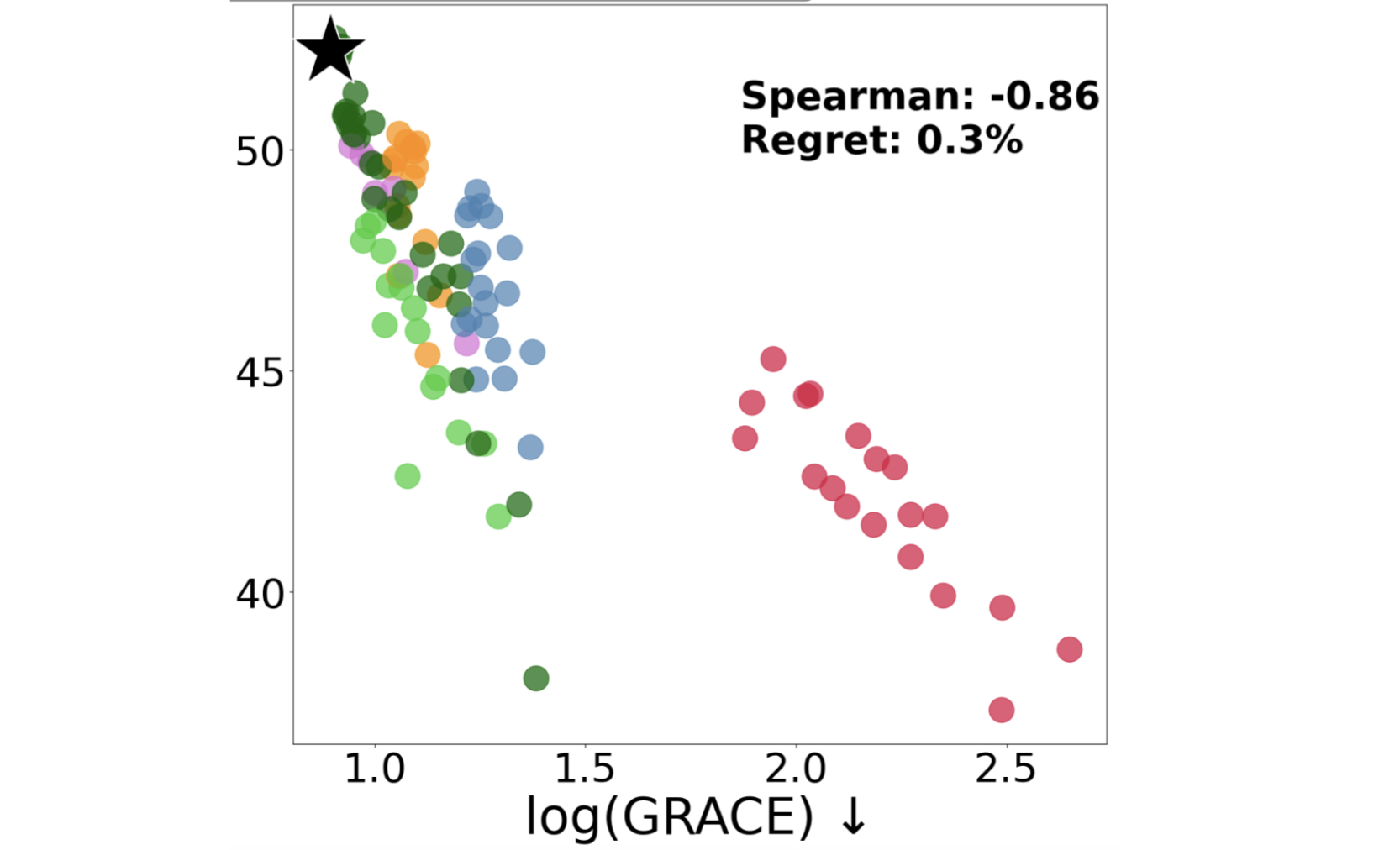

In Good GRACES: Principled Teacher Selection for Knowledge Distillation

Abhishek Panigrahi, Bingbin Liu, Sadhika Malladi, Sham M. Kakade, Surbhi Goel

In submission

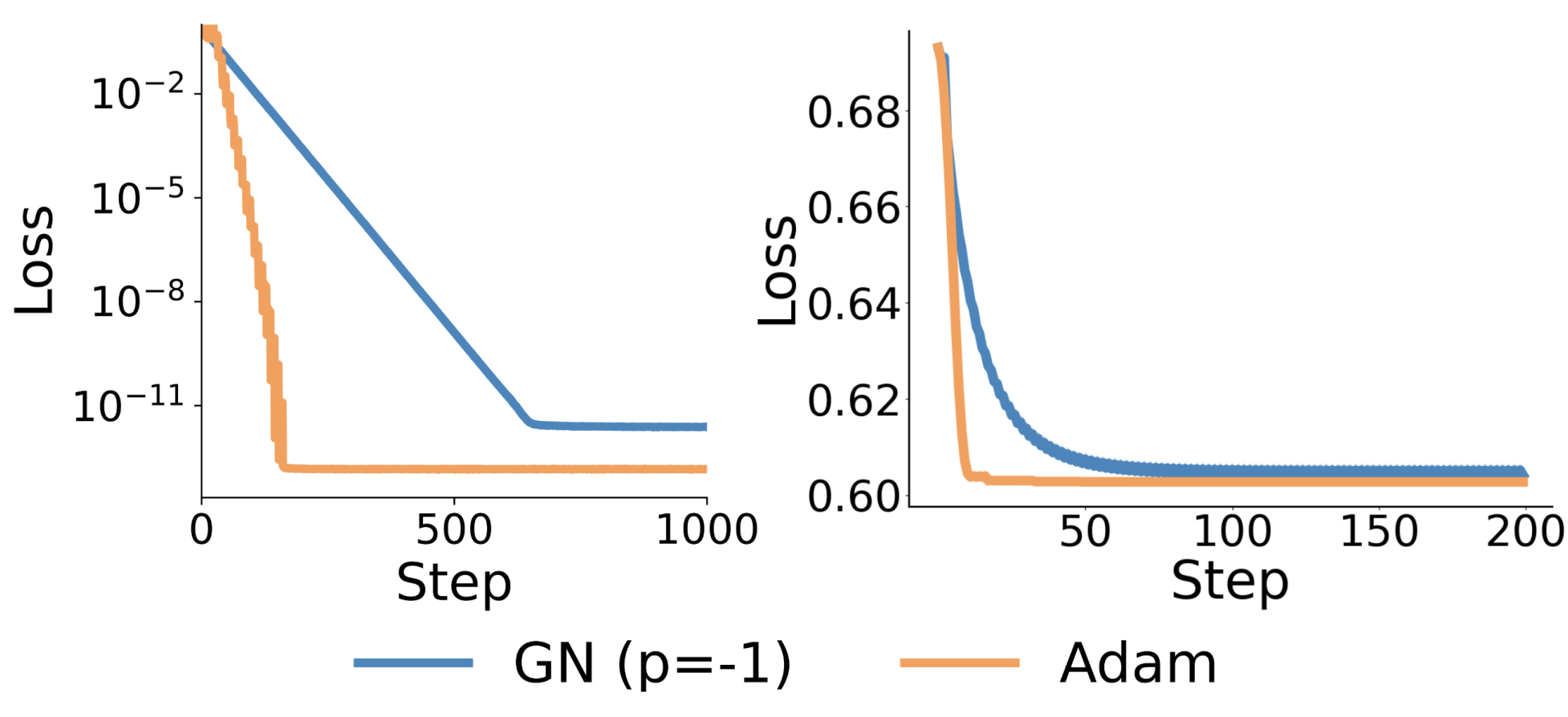

Adam or Gauss-Newton? A Comparative Study In Terms of Basis Alignment and SGD Noise

Bingbin Liu, Rachit Bansal, Depen Morwani, Nikhil Vyas, David Alvarez-Melis, Sham M. Kakade

In submission

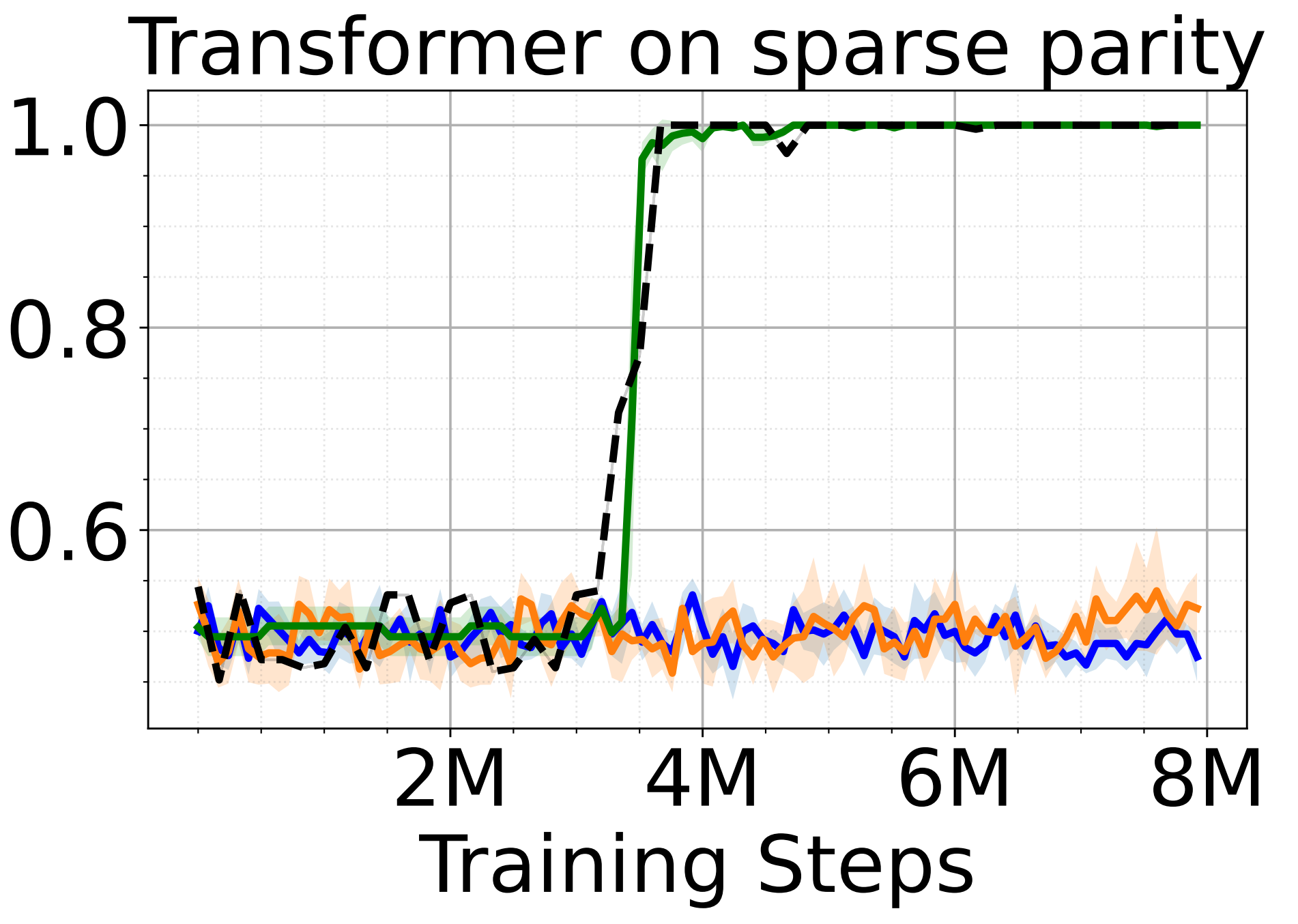

Progressive distillation induces an implicit curriculum

Abhishek Panigrahi*, Bingbin Liu*, Sadhika Malladi, Andrej Risteski, Surbhi Goel

ICLR 2025 (Oral)

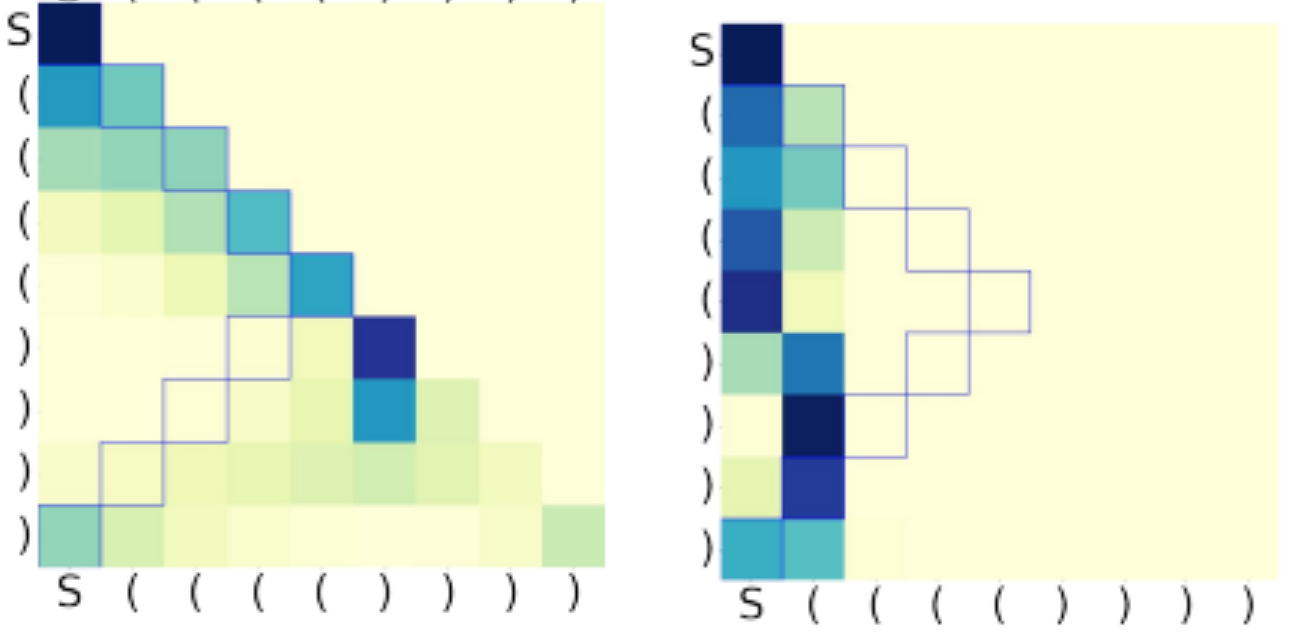

(Un)interpretability of Transformers: a case study with bounded Dyck grammars

Kaiyue Wen, Yuchen Li, Bingbin Liu, Andrej Risteski

NeurIPS 2023

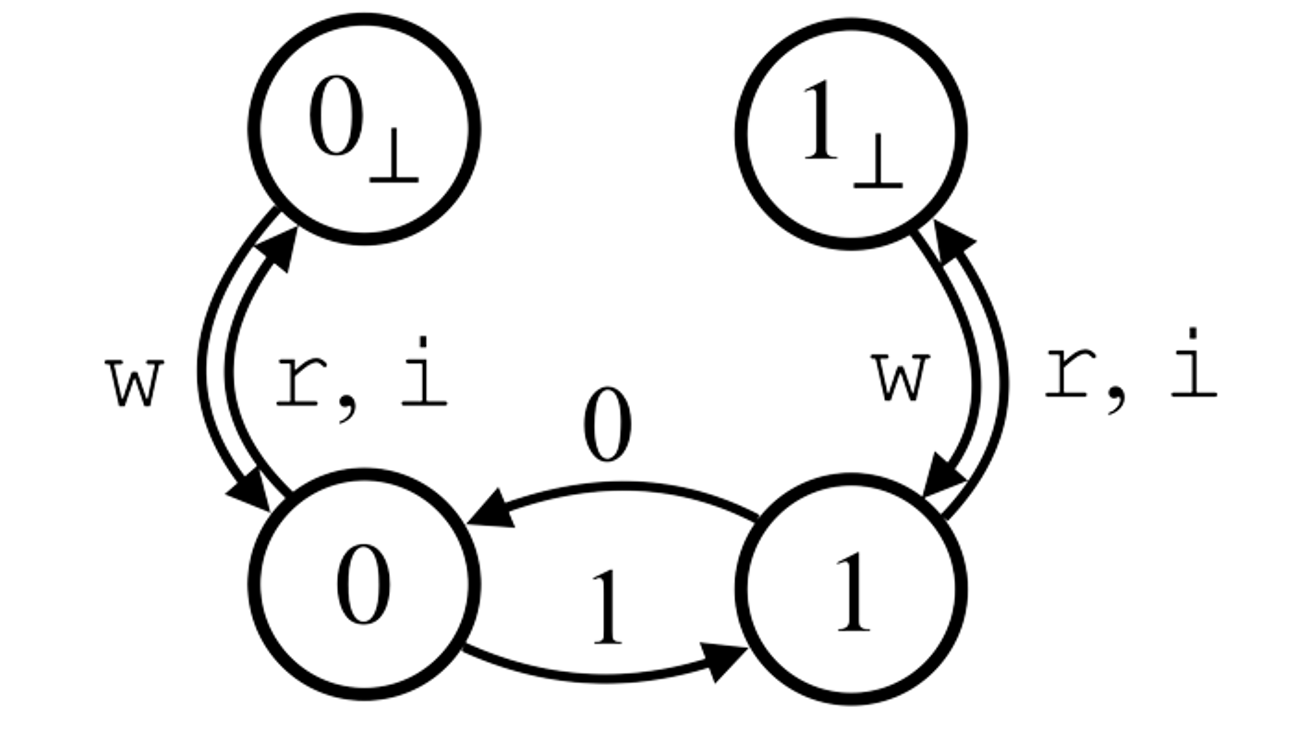

Exposing Attention Glitches with Flip-Flop Language Modeling

Bingbin Liu, Jordan T. Ash, Surbhi Goel, Akshay Krishnamurthy, Cyril Zhang

NeurIPS 2023 (Spotlight)

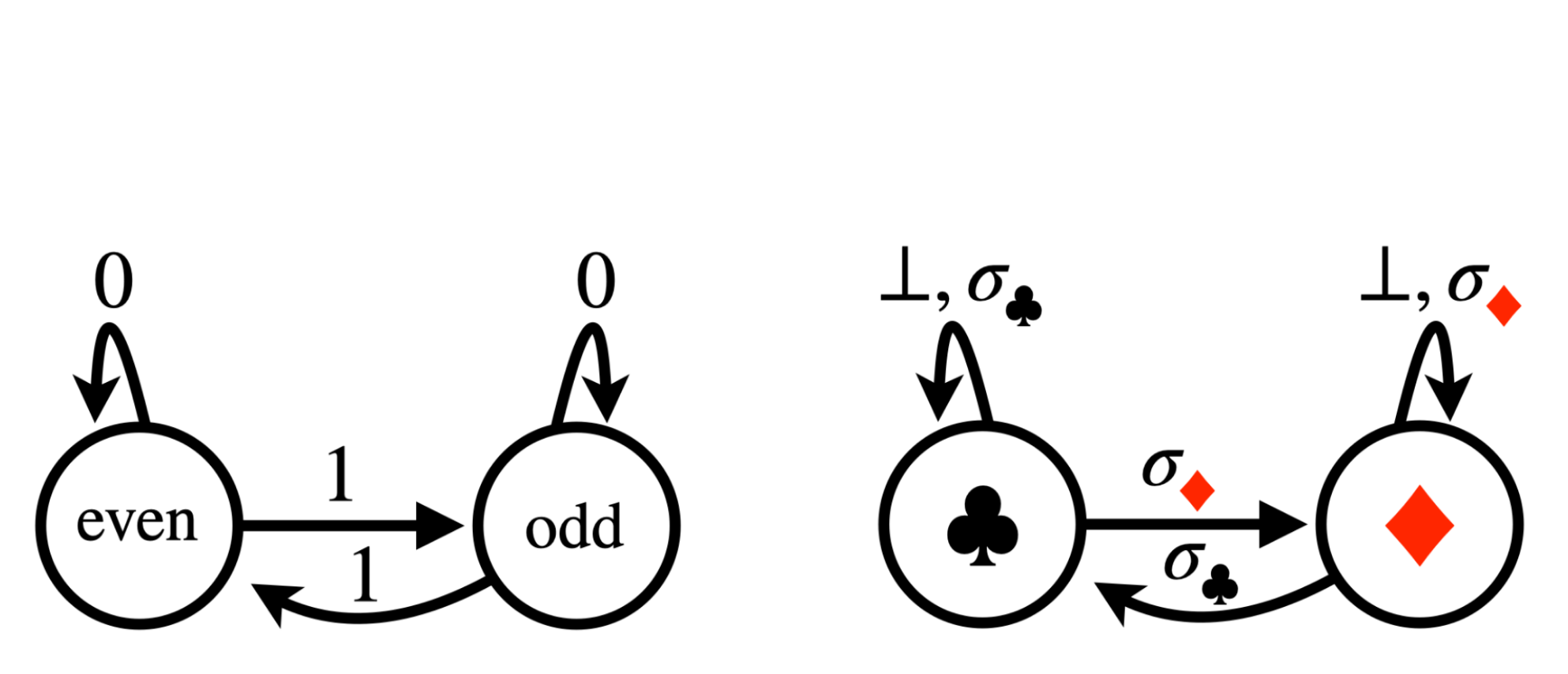

Transformers Learn Shortcuts to Automata

Bingbin Liu, Jordan T. Ash, Surbhi Goel, Akshay Krishnamurthy, Cyril Zhang

ICLR 2023 (Oral)

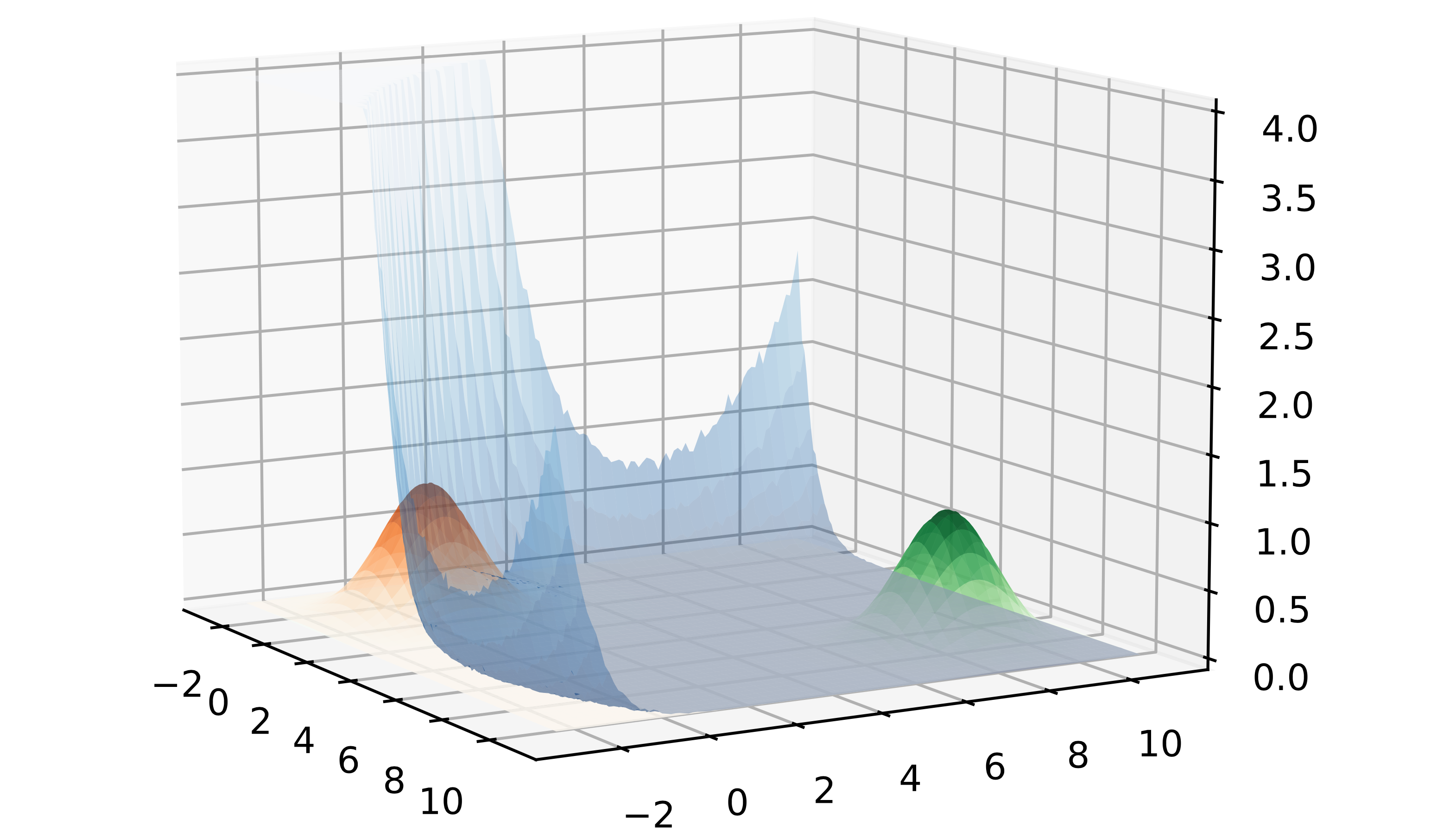

Masked prediction tasks: a parameter identifiability view

Bingbin Liu, Daniel Hsu, Pradeep Ravikumar, Andrej Risteski

NeurIPS 2022

Analyzing and improving the optimization landscape of noise-contrastive estimation

Bingbin Liu, Elan Rosenfeld, Pradeep Ravikumar, Andrej Risteski

ICLR 2022 (Spotlight)

Contrastive learning of strong-mixing continuous-time stochastic processes

Bingbin Liu, Pradeep Ravikumar, Andrej Risteski

AISTATS 2021

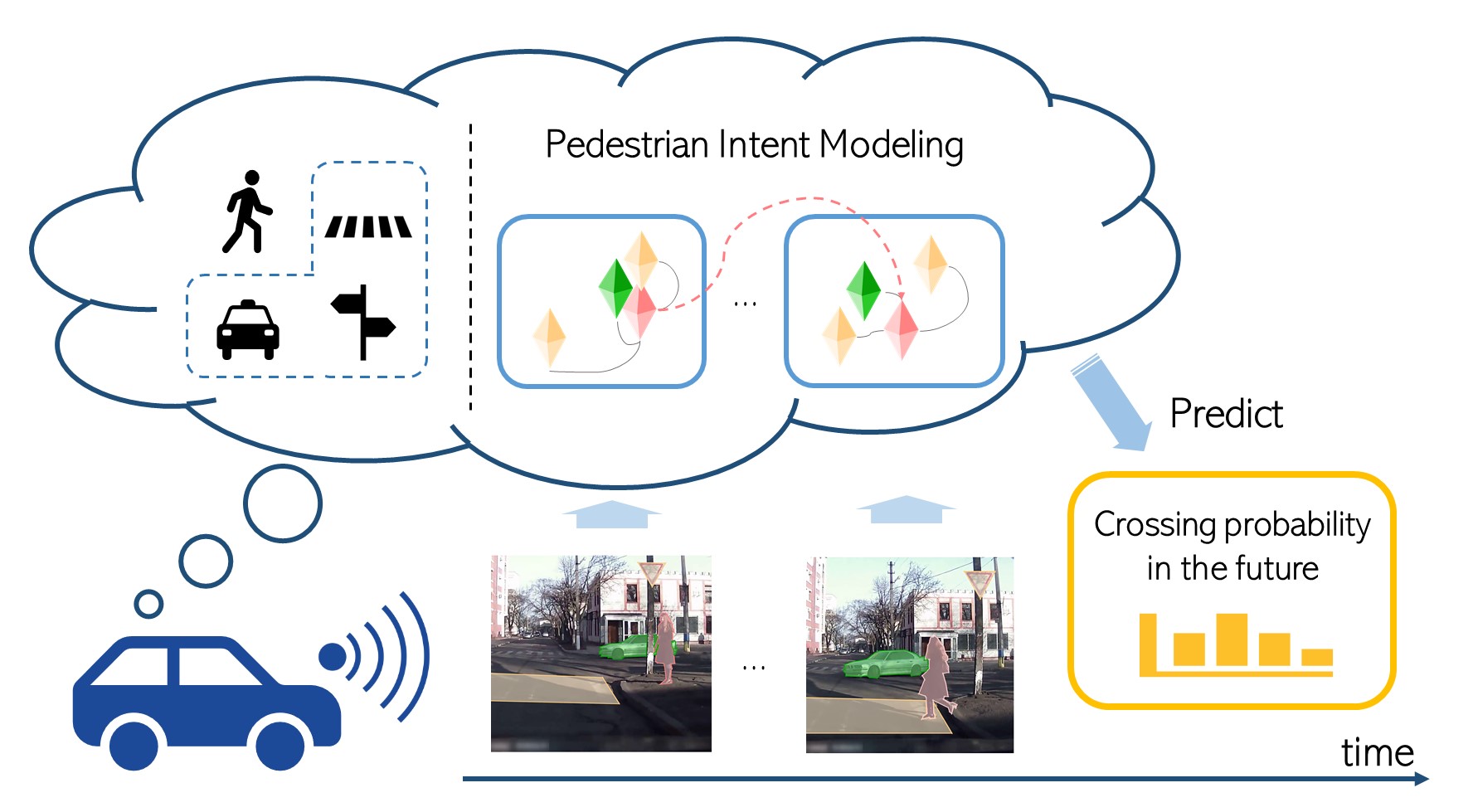

Spatiotemporal Relationship Reasoning for Pedestrian Intent Prediction

Bingbin Liu, Ehsan Adeli, Zhangjie Cao, Kuan-Hui Lee, Abhijeet Shenoi, Adrien Gaidon, Juan Carlos Niebles

IEEE-RAL & ICRA 2020

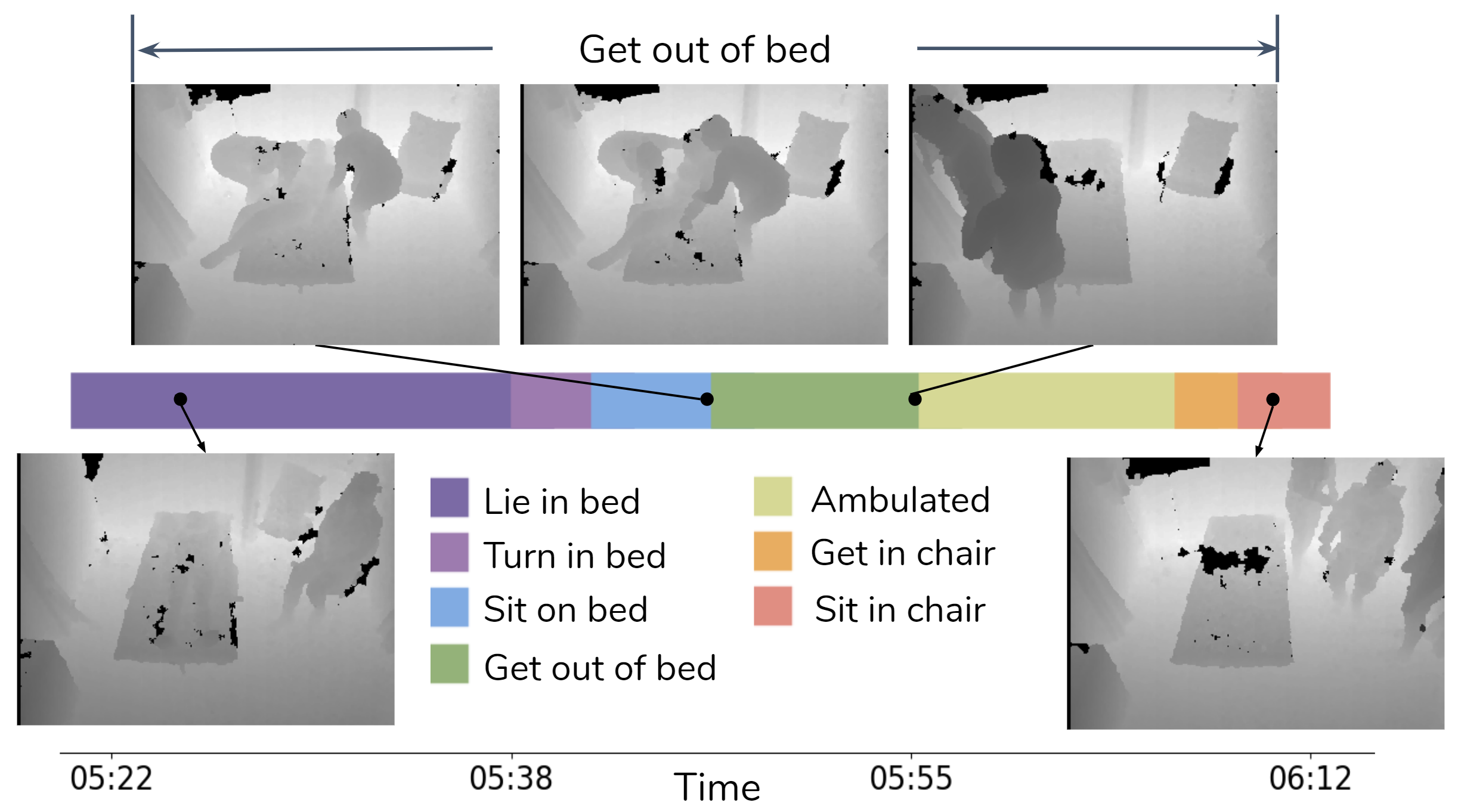

A Computer Vision System to Detect Bedside Patient Mobilization

Serena Yeung*, Francesca Rinaldo*, Jeffrey Jopling, Bingbin Liu, Rishab Mehra, Lance Downing, Michelle Guo, Gabriel Bianconi, Alexandre Alahi, Julia Lee, Brandi Campbell, Kayla Deru, William Beninati, Li Fei-Fei, Arnold Milstein

Nature Digital Medicine, 2019

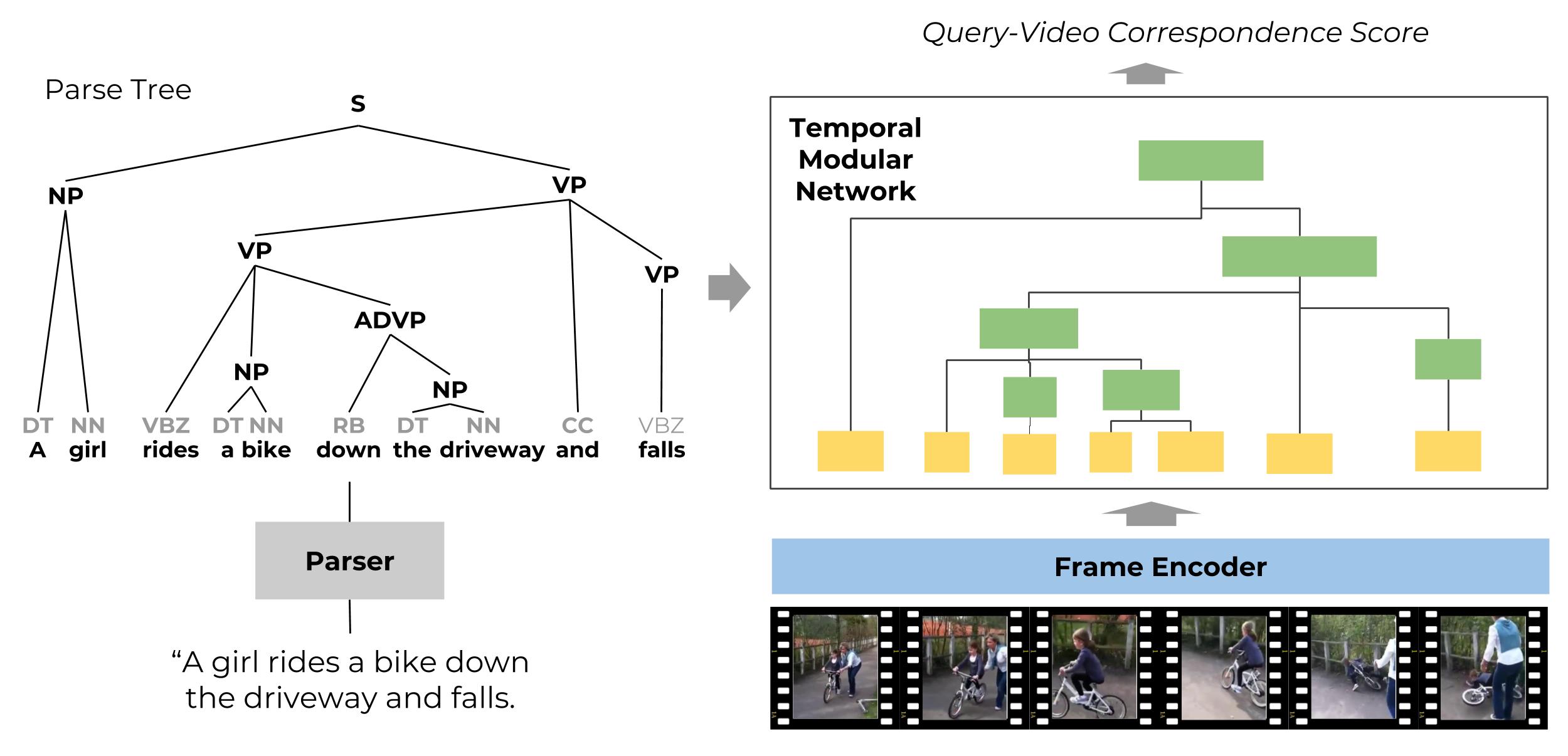

Temporal Modular Networks for Retrieving Complex Compositional Activities in Videos

Bingbin Liu, Serena Yeung, Edward Chou, De-An Huang, Li Fei-Fei, Juan Carlos Niebles

ECCV 2018

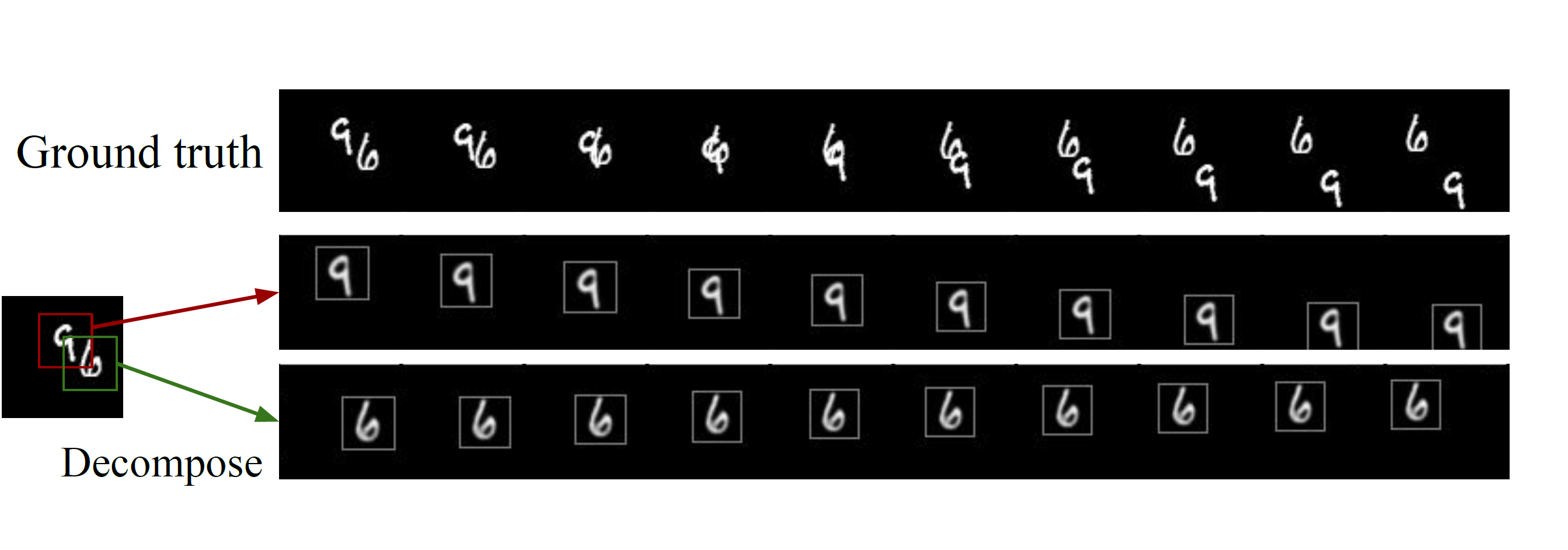

Learning to Decompose and Disentangle Representations for Video Prediction

Jun-Ting Hsieh, Bingbin Liu, De-An Huang, Li Fei-Fei, Juan Carlos Niebles

NeurIPS 2018

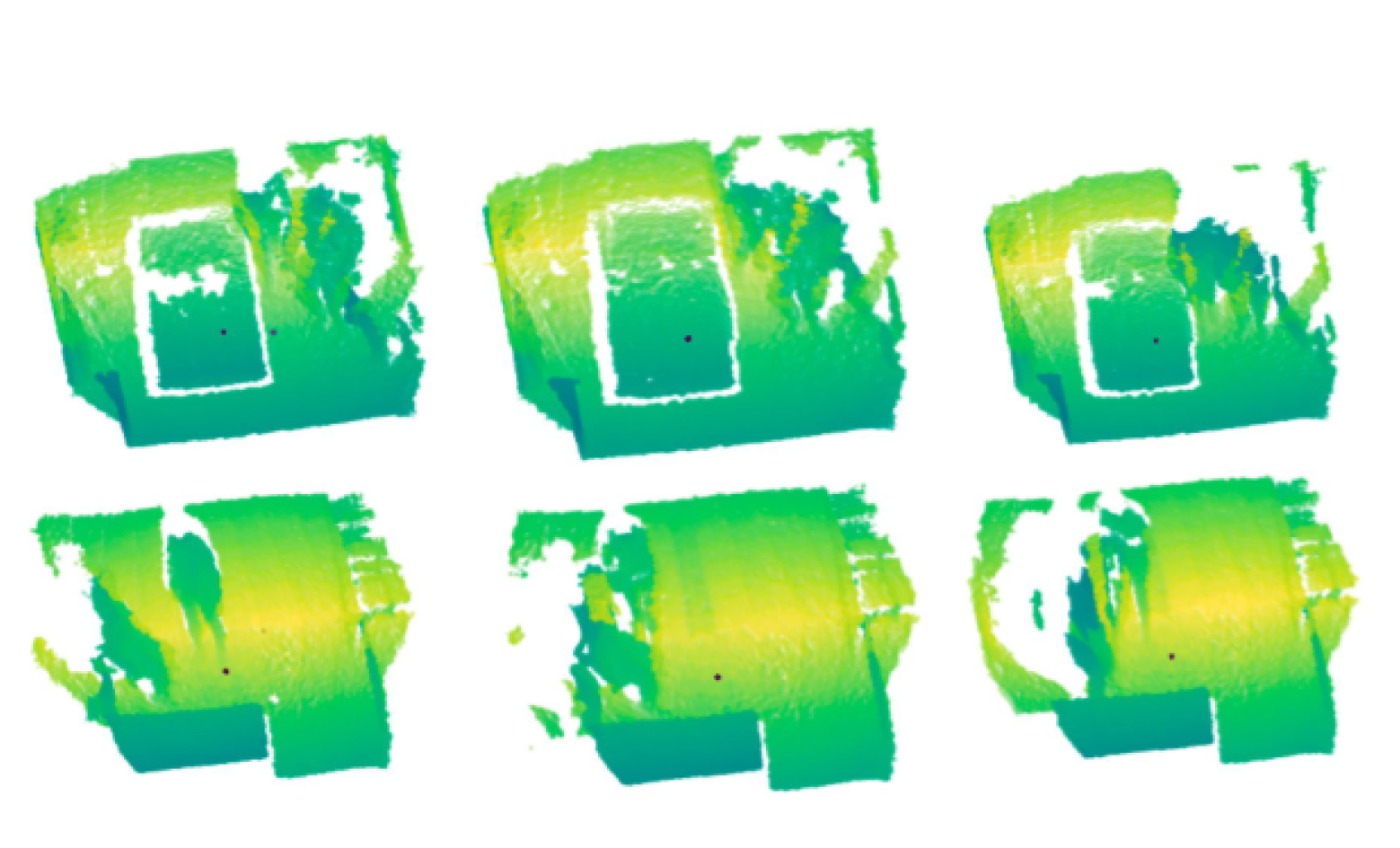

3D Point Cloud-Based Visual Prediction of ICU Mobility Care Activities

Bingbin Liu*, Michelle Guo*, Edward Chou, Rishab Mehra, Serena Yeung, N. Lance Downing, Francesca Salipur, Jeffrey Jopling, Brandi Campbell, Kayla Deru, William Beninati, Arnold Milstein

MLHC 2018

Activities

Talks

- NeurIPS24 Tutorial: Sandbox for the Blackbox: How LLMs Learn Structured Data?

  Tutorial at NeurIPS 2024, together with Ashok Vardhan Makkuva and Jason Lee. [NeurIPS page | Project page]

- Guiding machine learning design with insights from simple testbeds

  Talk at the Cornell Junior Theorists' Workshop at Cornell University, Spring 2024. [Slides]

- Learning from the Right Teacher in Knowledge Distillation

  Talk at Columbia University hosted by Daniel Hsu, Fall 2025. [Slides]

- Improving training with progressive distillation

  Remote talks hosted by Yusu Wang and 6th Youth in High-Dimensions at ICTP, 2025. [Slides]

- Capabilities and limitations of Transformer in sequential reasoning

  Talk at the Special Year of Language Models and Transformers program at the Simons Institute, Fall 2024. [Slides]

- Thinking Fast with Transformers: algorithmic reasoning with shortcuts

  Remote talks hosted by the Math Machine Learning seminar MPI MIS + UCLA, Chris Maddison, and FAI-Seminar, 2023. [Slides]

- Exposing Attention Glitches with Flip-Flop Language Modeling

  Remote talk at Women in AI & Robotics, Spring 2025. [Slides]

- Transformers Learn Shortcuts to Automata

  Remote talks hosted by Formal Languages and Neural Networks Seminar and Jacob Steinhardt, 2022-2023. [Slides]

Organized Events

- LeT-All Fall 2025 Mentorship Workshop

- Methods and Opportunities at Small Scale (MOSS) workshop at ICML 2025 (Project page)

- Mathematics of Modern Machine Learning (M3L) workshop series at NeurIPS 2024 and NeurIPS 2023.

- Parallel vs Recurrent Models reading group at Simons Institute, Fall 2024.

Misc

I want to keep a list of good advice I've received from various friends and mentors. Please let me know if you have recommendations (especially since I'm doing a horrible job at gender balance here)!

- The most important points: Value (and protect) your time, the power of writing, and clarity of thought. Be kind.

- A PhD can be intense and/or rewarding. It's a very personal journey, but you're very likely not alone. In case you want to hear from some fellow PhD students/graduates, here's some advice by Karl Stratos, Kevin Gimpel, Matt Might, Ben Chugg.

- Research advice by R. W. Hamming, Terence Tao, Patrick Winston (how to speak), Ankur Moitra (how to do theory), Aaditya Ramdas (how to read papers), John N. Tsitsiklis (how to write technical content). LeT-All also has nice advice on various topics from multiple years!

- Why theory/math? Talk by Tim Gowers.

Random. Some photos of places I've lived and furry friends I met along the way.

A Connections game for our wedding.

(More to be continued :))